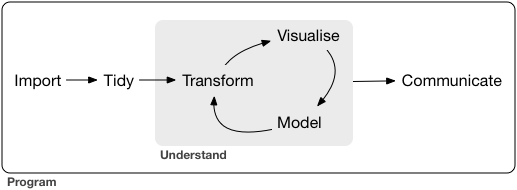

class: center, middle, inverse, title-slide # A whirlwind tour of working with data in R ### Paul Campbell<br>Culture of Insight ### <br>October 2018 --- class: inverse, middle, center # Why R? <base target="_blank"> --- # R .pull-left[ - R is a programming language built for data analysis and visualisation - It's 'open-source' which means it is completely free to use - Developed and maintained by an active not-for-profit team of software engineers and developers - This means it is always growing and improving with over 11,000 R 'packages' freely available to use - Highly flexible - adapt to suit your own needs without the constraints of proprietary software - Community - welcoming community of R users online (#rstats) always happy to help and make it fun for you to learn ] .pull-right[  ] --- # R vs Excel .pull-left[ But I'm already an Excel wizard, why do I need R? - As the importance and abundance of data increases, so does the complexity of the data tasks we're asked to fulfill - Doing complex tasks with large data volumes in Excel is...Difficult! - Learning to programme these tasks with R will: * reduce human error * allow you to work with larger datasets * make your analysis more transparent and reproducible * automate the boring repetitive parts * save you **so much time** ] .pull-right[  Source: [Gordon Shotwell](https://blog.shotwell.ca/2017/02/02/r-for-excel-users/) ] ??? Excel has long been the industry-standard tool for working with data but it's limitations are becoming more and more apparent as data volumes increase as well as the demand for accuracy and reproducibility in data analysis --- # R vs Python But isn't python the best for this type of stuff? .pull-left[ - Python is also a great language for data analysis, but it's scope extends way beyond this and is generally better suited to people with computer science backgrounds - If you're interested in learning to code with the end goal of harnessing AI and deploying machine learning models in large-scale production environments - choose python! - If your primary objective is to get better at manipulating and visualisation data, and communicating the results in reports and interactive dashboards - R is your new best friend (examples to come) ] .pull-right[  ] --- class: inverse, middle, center # The R Workflow  Source: [R for Data Science](http://r4ds.had.co.nz) --- class: inverse, middle, center # Importing --- # Importing Data .pull-left[ R can import data from just about every form imaginable: - Flat files like plain text and delimited data: .txt, .csv, .tsv - Data files from proprietary software like MS Excel, SPSS, SAS - Directly from Databases: MySQL, MS SQL Server, PostgreSQL - Directly from the Web: APIs, JSON, Webscrape HTML ] .pull-right[  ] --- # Importing Data - CSV ```r library(tidyverse) # load full suite of packages for data analysis london_crime <- read_csv("data/london_crime.csv") # import london violent crime data london_crime # preview the data ``` ``` ## # A tibble: 32 x 19 ## Code Borough `1999-00` `2000-01` `2001-02` `2002-03` `2003-04` ## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 E09000~ Barking and ~ 19.8 22.0 23.8 26.4 29.5 ## 2 E09000~ Barnet 13.5 14.5 15.2 16.4 18.1 ## 3 E09000~ Bexley 14.5 14.7 15.3 16.2 18.4 ## 4 E09000~ Brent 20.8 21.8 21.1 23.3 27.5 ## 5 E09000~ Bromley 10.9 12.9 13.0 15.9 16.8 ## 6 E09000~ Camden 28.1 27.2 27.0 28.5 31.4 ## 7 E09000~ Croydon 17.7 18.9 18.5 22.8 23.6 ## 8 E09000~ Ealing 22.0 21.1 22.3 23.1 23.2 ## 9 E09000~ Enfield 14.8 17.4 18.3 19.9 18.2 ## 10 E09000~ Greenwich 29.4 29.4 28.8 31.1 30.6 ## # ... with 22 more rows, and 12 more variables: `2004-05` <dbl>, ## # `2005-06` <dbl>, `2006-07` <dbl>, `2007-08` <dbl>, `2008-09` <dbl>, ## # `2009-10` <dbl>, `2010-11` <dbl>, `2011-12` <dbl>, `2012-13` <dbl>, ## # `2013-14` <dbl>, `2014-15` <dbl>, `2015-16` <dbl> ``` --- # Importing Data - Multiple Excel Sheets to 1 Data Frame .center[ You might often have data split across several tabs in an excel file like below. <img src="static/imgs/gapminder_messy.png" alt="excel tabs" width="60%"/> We can quickly combine all this data into one data table with a small amount of R code... ] --- # Importing Data - Multiple Excel Sheets to 1 Data Frame .pull-left[ ```r library(readxl) # for reading excel files path <- "data/gapminder_messy.xlsx" combined_data <- excel_sheets(path) %>% map_df(~ { read_excel(path, sheet = .x, skip = 4, trim_ws = TRUE) %>% mutate(year = as.numeric(.x)) }) %>% select(country, year, everything()) # show top and bottom 6 rows of data frame head(combined_data) tail(combined_data) ``` ] .pull-right[ ``` ## # A tibble: 6 x 5 ## country year gdpPercap lifeExp pop ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Afghanistan 2007 975 43.8 31889923 ## 2 Albania 2007 5937 76.4 3600523 ## 3 Algeria 2007 6223 72.3 33333216 ## 4 Angola 2007 4797 42.7 12420476 ## 5 Argentina 2007 12779 75.3 40301927 ## 6 Australia 2007 34435 81.2 20434176 ``` ``` ## # A tibble: 6 x 5 ## country year gdpPercap lifeExp pop ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Venezuela 1952 7690 55.1 5439568 ## 2 Vietnam 1952 605 40.4 26246839 ## 3 West Bank and Gaza 1952 1516 43.2 1030585 ## 4 Yemen, Rep. 1952 782 32.5 4963829 ## 5 Zambia 1952 1147 42.0 2672000 ## 6 Zimbabwe 1952 407 48.5 3080907 ``` ] --- # Importing Data - SPSS You don't have to pay expensive SPSS licenses to analyse your survey data! ```r library(haven) # for reading SPSS, SAS, STATA files survey_data <- read_sav("data/sample_spss_data.sav") head(survey_data) ``` ``` ## # A tibble: 6 x 682 ## SubsID responseid respid dCOUNTRY fQ1bGender foQ1aAge_1 dAge Q2Kids_1 ## <chr> <dbl> <dbl> <dbl+lb> <dbl+lbl> <dbl> <dbl+> <dbl> ## 1 218884~ 345 347 1 1 68.0 3 2.00 ## 2 235864~ 185 187 1 1 51.0 2 2.00 ## 3 237393~ 108 110 1 2 57.0 3 2.00 ## 4 250156~ 524 526 1 2 53.0 2 2.00 ## 5 254053~ 349 351 1 2 25.0 1 2.00 ## 6 200042~ 823 825 1 2 54.0 2 1.00 ## # ... with 674 more variables: Q2Kids_2 <dbl>, Q2Kids_3 <dbl>, ## # dKIDS <dbl+lbl>, Q3 <dbl+lbl>, dNET <dbl+lbl>, fQ2UKRegion <dbl+lbl>, ## # dRegionUK <dbl+lbl>, fQ2FRRegion <dbl+lbl>, dRegionFR <dbl+lbl>, ## # fQ2DERegion <dbl+lbl>, dRegionDE <dbl+lbl>, Q2TURRegion <dbl+lbl>, ## # Q2IncomeUK <dbl+lbl>, dIncomeUK <dbl+lbl>, Q2IncomeFR <dbl+lbl>, ## # dIncomeFR <dbl+lbl>, Q2IncomeDE <dbl+lbl>, dIncomeDE <dbl+lbl>, ## # Q2IncomeTUR <dbl+lbl>, dIncomeTUR <dbl+lbl>, Q4_1 <dbl+lbl>, ## # Q4_2 <dbl+lbl>, Q4_3 <dbl+lbl>, Q4_4 <dbl+lbl>, Q4_5 <dbl+lbl>, ## # Q4_6 <dbl+lbl>, Q4_99 <dbl+lbl>, Q6_1 <dbl+lbl>, Q6_2 <dbl+lbl>, ## # Q6_3 <dbl+lbl>, Q6_4 <dbl+lbl>, Q6_5 <dbl+lbl>, Q6_98 <dbl+lbl>, ## # Q6_99 <dbl+lbl>, dBiscLMAll_1 <dbl+lbl>, dBiscLMAll_2 <dbl+lbl>, ## # dBiscLMAll_3 <dbl+lbl>, dBiscLMAll_4 <dbl+lbl>, ## # dBiscLMAll_5 <dbl+lbl>, dBiscLMAll_6 <dbl+lbl>, dTypLMAll_1 <dbl+lbl>, ## # dTypLMAll_2 <dbl+lbl>, dTypLMAll_3 <dbl+lbl>, dTypLMAll_4 <dbl+lbl>, ## # dNumber <dbl+lbl>, fQ13_1 <dbl+lbl>, fQ13_2 <dbl+lbl>, ## # fQ13_3 <dbl+lbl>, fQ13_4 <dbl+lbl>, fQ13_5 <dbl+lbl>, ## # fQ13_6 <dbl+lbl>, fQ13_7 <dbl+lbl>, fQ13_8 <dbl+lbl>, ## # fQ13_9 <dbl+lbl>, fQ13_10 <dbl+lbl>, fQ13_11 <dbl+lbl>, ## # fQ13_12 <dbl+lbl>, fQ13_13 <dbl+lbl>, fQ13_14 <dbl+lbl>, ## # fQ13_15 <dbl+lbl>, fQ13_16 <dbl+lbl>, fQ13_17 <dbl+lbl>, ## # fQ13_18 <dbl+lbl>, fQ13_19 <dbl+lbl>, fQ13_20 <dbl+lbl>, ## # fQ13_21 <dbl+lbl>, fQ13_22 <dbl+lbl>, fQ13_23 <dbl+lbl>, ## # fQ13_24 <dbl+lbl>, fQ13_25 <dbl+lbl>, fQ13_26 <dbl+lbl>, ## # fQ13_27 <dbl+lbl>, fQ13_28 <dbl+lbl>, fQ13_29 <dbl+lbl>, ## # fQ13_30 <dbl+lbl>, fQ13_31 <dbl+lbl>, fQ13_32 <dbl+lbl>, ## # fQ13_33 <dbl+lbl>, fQ13_34 <dbl+lbl>, fQ13_35 <dbl+lbl>, ## # fQ13_36 <dbl+lbl>, fQ13_37 <dbl+lbl>, fQ13_38 <dbl+lbl>, ## # Q25A_1 <dbl+lbl>, Q25A_2 <dbl+lbl>, Q25A_3 <dbl+lbl>, ## # Q25A_4 <dbl+lbl>, Q25A_5 <dbl+lbl>, Q25A_6 <dbl+lbl>, ## # Q25A_7 <dbl+lbl>, Q25A_8 <dbl+lbl>, Q25A_9 <dbl+lbl>, ## # Q25A_10 <dbl+lbl>, Q25A_11 <dbl+lbl>, Q25A_12 <dbl+lbl>, ## # Q25A_13 <dbl+lbl>, Q25A_14 <dbl+lbl>, Q25A_15 <dbl+lbl>, ## # Q25A_16 <dbl+lbl>, Q25A_95 <dbl+lbl>, ... ``` --- # Importing Data - SPSS ```r # convert spss label codes into labels labelled_survey_data <- as_factor(survey_data) head(labelled_survey_data) ``` ``` ## # A tibble: 6 x 682 ## SubsID responseid respid dCOUNTRY fQ1bGender foQ1aAge_1 dAge Q2Kids_1 ## <chr> <dbl> <dbl> <fct> <fct> <dbl> <fct> <dbl> ## 1 21888465 345 347 UK Male 68.0 55+ 2.00 ## 2 23586407 185 187 UK Male 51.0 35-54 2.00 ## 3 23739306 108 110 UK Female 57.0 55+ 2.00 ## 4 25015655 524 526 UK Female 53.0 35-54 2.00 ## 5 25405304 349 351 UK Female 25.0 16-34 2.00 ## 6 20004255 823 825 UK Female 54.0 35-54 1.00 ## # ... with 674 more variables: Q2Kids_2 <dbl>, Q2Kids_3 <dbl>, ## # dKIDS <fct>, Q3 <fct>, dNET <fct>, fQ2UKRegion <fct>, dRegionUK <fct>, ## # fQ2FRRegion <fct>, dRegionFR <fct>, fQ2DERegion <fct>, ## # dRegionDE <fct>, Q2TURRegion <fct>, Q2IncomeUK <fct>, dIncomeUK <fct>, ## # Q2IncomeFR <fct>, dIncomeFR <fct>, Q2IncomeDE <fct>, dIncomeDE <fct>, ## # Q2IncomeTUR <fct>, dIncomeTUR <fct>, Q4_1 <fct>, Q4_2 <fct>, ## # Q4_3 <fct>, Q4_4 <fct>, Q4_5 <fct>, Q4_6 <fct>, Q4_99 <fct>, ## # Q6_1 <fct>, Q6_2 <fct>, Q6_3 <fct>, Q6_4 <fct>, Q6_5 <fct>, ## # Q6_98 <fct>, Q6_99 <fct>, dBiscLMAll_1 <fct>, dBiscLMAll_2 <fct>, ## # dBiscLMAll_3 <fct>, dBiscLMAll_4 <fct>, dBiscLMAll_5 <fct>, ## # dBiscLMAll_6 <fct>, dTypLMAll_1 <fct>, dTypLMAll_2 <fct>, ## # dTypLMAll_3 <fct>, dTypLMAll_4 <fct>, dNumber <fct>, fQ13_1 <fct>, ## # fQ13_2 <fct>, fQ13_3 <fct>, fQ13_4 <fct>, fQ13_5 <fct>, fQ13_6 <fct>, ## # fQ13_7 <fct>, fQ13_8 <fct>, fQ13_9 <fct>, fQ13_10 <fct>, ## # fQ13_11 <fct>, fQ13_12 <fct>, fQ13_13 <fct>, fQ13_14 <fct>, ## # fQ13_15 <fct>, fQ13_16 <fct>, fQ13_17 <fct>, fQ13_18 <fct>, ## # fQ13_19 <fct>, fQ13_20 <fct>, fQ13_21 <fct>, fQ13_22 <fct>, ## # fQ13_23 <fct>, fQ13_24 <fct>, fQ13_25 <fct>, fQ13_26 <fct>, ## # fQ13_27 <fct>, fQ13_28 <fct>, fQ13_29 <fct>, fQ13_30 <fct>, ## # fQ13_31 <fct>, fQ13_32 <fct>, fQ13_33 <fct>, fQ13_34 <fct>, ## # fQ13_35 <fct>, fQ13_36 <fct>, fQ13_37 <fct>, fQ13_38 <fct>, ## # Q25A_1 <fct>, Q25A_2 <fct>, Q25A_3 <fct>, Q25A_4 <fct>, Q25A_5 <fct>, ## # Q25A_6 <fct>, Q25A_7 <fct>, Q25A_8 <fct>, Q25A_9 <fct>, Q25A_10 <fct>, ## # Q25A_11 <fct>, Q25A_12 <fct>, Q25A_13 <fct>, Q25A_14 <fct>, ## # Q25A_15 <fct>, Q25A_16 <fct>, Q25A_95 <fct>, ... ``` --- # Importing Data - SPSS Quickly get some summary stats about your respondents... ```r labelled_survey_data %>% count(fQ1bGender, dAge) %>% spread(fQ1bGender, n) %>% knitr::kable(format = "html") ``` <table> <thead> <tr> <th style="text-align:left;"> dAge </th> <th style="text-align:right;"> Male </th> <th style="text-align:right;"> Female </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> 16-34 </td> <td style="text-align:right;"> 510 </td> <td style="text-align:right;"> 596 </td> </tr> <tr> <td style="text-align:left;"> 35-54 </td> <td style="text-align:right;"> 664 </td> <td style="text-align:right;"> 612 </td> </tr> <tr> <td style="text-align:left;"> 55+ </td> <td style="text-align:right;"> 362 </td> <td style="text-align:right;"> 480 </td> </tr> </tbody> </table> --- # Importing Data - Databases ```r library(DBI) # for connecting to databases con <- dbConnect( drv = RMySQL::MySQL(), dbname = "shinydemo", host = "shiny-demo.csa7qlmguqrf.us-east-1.rds.amazonaws.com", username = "guest", password = "guest" ) dbListTables(con) ``` ``` ## [1] "City" "Country" "CountryLanguage" ``` --- # Importing Data - Databases ```r cities <- tbl(con, "City") cities ``` ``` ## # Source: table<City> [?? x 5] ## # Database: mysql 10.0.17-MariaDB ## # [guest@shiny-demo.csa7qlmguqrf.us-east-1.rds.amazonaws.com:/shinydemo] ## ID Name CountryCode District Population ## <dbl> <chr> <chr> <chr> <dbl> ## 1 1.00 Kabul AFG Kabol 1780000 ## 2 2.00 Qandahar AFG Qandahar 237500 ## 3 3.00 Herat AFG Herat 186800 ## 4 4.00 Mazar-e-Sharif AFG Balkh 127800 ## 5 5.00 Amsterdam NLD Noord-Holland 731200 ## 6 6.00 Rotterdam NLD Zuid-Holland 593321 ## 7 7.00 Haag NLD Zuid-Holland 440900 ## 8 8.00 Utrecht NLD Utrecht 234323 ## 9 9.00 Eindhoven NLD Noord-Brabant 201843 ## 10 10.0 Tilburg NLD Noord-Brabant 193238 ## # ... with more rows ``` --- # Web APIs Many R packages make it easy for you to pull publicly available data via an API (application programming interface). The code below retrieves data from the UK police database within 1 mile of the coordinates you provide for the latest available month. ```r library(ukpolice) # R wrapper to the UK Police API crime_data <- ukp_crime(lat = 51.533802, lng = -0.092715) head(crime_data) ``` ``` ## # A tibble: 6 x 12 ## category persistent_id date lat long street_id street_name context ## <chr> <chr> <chr> <dbl> <dbl> <chr> <chr> <chr> ## 1 anti-so~ "" 2018~ 51.5 -0.114 960854 On or near~ "" ## 2 anti-so~ "" 2018~ 51.5 -0.0826 961467 On or near~ "" ## 3 anti-so~ "" 2018~ 51.5 -0.0826 961467 On or near~ "" ## 4 anti-so~ "" 2018~ 51.5 -0.0820 968664 On or near~ "" ## 5 anti-so~ "" 2018~ 51.5 -0.101 961239 On or near~ "" ## 6 anti-so~ "" 2018~ 51.5 -0.0938 964580 On or near~ "" ## # ... with 4 more variables: id <chr>, location_type <chr>, ## # location_subtype <chr>, outcome_status <chr> ``` --- class: inverse, middle, center # Manipulation --- # Transforming Data .pull-left[  ] .pull-right[  ] https://github.com/gadenbuie/tidy-animated-verbs --- # Transforming Data ```r # data set with details of every tree in paris (1 row = 1 tree) trees_in_paris <- read_csv2("data/les-arbres.csv") colnames(trees_in_paris) # how's your french? ``` ``` ## [1] "IDBASE" "TYPEEMPLACEMENT" "DOMANIALITE" ## [4] "ARRONDISSEMENT" "COMPLEMENTADRESSE" "NUMERO" ## [7] "LIEU / ADRESSE" "IDEMPLACEMENT" "CIRCONFERENCEENCM" ## [10] "HAUTEUR (m)" "STADEDEVELOPPEMENT" "PEPINIERE" ## [13] "ESPECE" "VARIETEOUCULTIVAR" "GENRE" ## [16] "DATEPLANTATION" "REMARQUABLE" "LIBELLEFRANCAIS" ## [19] "OBJECTID" "geo_point_2d" ``` Can we answer the question... > How many trees does each Arrondissement have, and what century were they planted in? We only need 2 columns from the raw data to answer this... --- # Transforming Data ```r transformed_trees <- trees_in_paris %>% select(ARRONDISSEMENT, DATEPLANTATION) %>% # select only Arr. and Date Planted cols mutate(year = lubridate::year(DATEPLANTATION), # pull year number from full date century_planted = case_when( # year < 1700 ~ "17th", # year < 1800 ~ "18th", # year < 1900 ~ "19th", # code a century planted column year < 2000 ~ "20th", # based on year number year < 2018 ~ "21st", # is.na(year) ~ "Unknown" # ) # ) %>% # count(ARRONDISSEMENT, century_planted) %>% # count the number of trees by Arr. & Century group_by(ARRONDISSEMENT) %>% # group the data by Arr. add_tally(n) %>% # add column with total trees for each Arr. ungroup() %>% # ungroup the data spread(century_planted, n) %>% # spread centuries from rows to columns select(1, 3:8, Total = nn) %>% # reorder and rename columns arrange(desc(Total)) # sort data by total column ``` --- # Results... The 16th Arrondissement has the most trees in Paris! Most of which were planted in the 18th Century. ```r transformed_trees ``` ``` ## # A tibble: 25 x 8 ## ARRONDISSEMENT `17th` `18th` `19th` `20th` `21st` Unknown Total ## <chr> <int> <int> <int> <int> <int> <int> <int> ## 1 PARIS 16E ARRDT NA 13970 21 2154 2059 1301 19505 ## 2 PARIS 15E ARRDT NA 11910 25 3869 2198 974 18976 ## 3 PARIS 13E ARRDT NA 10528 1 2998 3972 1331 18830 ## 4 PARIS 20E ARRDT NA 12309 NA 1646 2316 1004 17275 ## 5 PARIS 19E ARRDT NA 10421 NA 1452 2353 990 15216 ## 6 PARIS 14E ARRDT NA 10752 2 1280 1858 560 14452 ## 7 PARIS 12E ARRDT NA 8602 17 2648 2231 672 14170 ## 8 PARIS 17E ARRDT NA 6414 3 1984 2557 1157 12115 ## 9 SEINE-SAINT-DENIS NA 11230 NA NA 285 254 11769 ## 10 PARIS 18E ARRDT NA 6116 NA 1941 2556 862 11475 ## # ... with 15 more rows ``` --- # Joining Data ```r co2 <- read.table("ftp://aftp.cmdl.noaa.gov/products/trends/co2/co2_annmean_mlo.txt") %>% as_tibble() %>% select(Year = V1, CO2 = V2) temp <- read.table("https://go.nasa.gov/2r8ryH1") %>% as_tibble() %>% select(Year = V1, `Global Land-Ocean Temperature Index` = V2) ``` .pull-left[ ``` ## # A tibble: 59 x 2 ## Year CO2 ## <int> <dbl> ## 1 1959 316 ## 2 1960 317 ## 3 1961 318 ## 4 1962 318 ## 5 1963 319 ## 6 1964 320 ## 7 1965 320 ## 8 1966 321 ## 9 1967 322 ## 10 1968 323 ## # ... with 49 more rows ``` ] .pull-right[ ``` ## # A tibble: 138 x 2 ## Year `Global Land-Ocean Temperature Index` ## <int> <dbl> ## 1 1880 -0.190 ## 2 1881 -0.100 ## 3 1882 -0.100 ## 4 1883 -0.190 ## 5 1884 -0.280 ## 6 1885 -0.310 ## 7 1886 -0.320 ## 8 1887 -0.350 ## 9 1888 -0.180 ## 10 1889 -0.110 ## # ... with 128 more rows ``` ] --- # Joining Data .pull-left[  ] .pull-right[ To look for a relationship between each dataset we want to join them but only keep years for which there is both CO2 and Global Temperature data. ```r joined_data <- inner_join(co2, temp, by = "Year") joined_data ``` ``` ## # A tibble: 59 x 3 ## Year CO2 `Global Land-Ocean Temperature Index` ## <int> <dbl> <dbl> ## 1 1959 316 0.0300 ## 2 1960 317 -0.0200 ## 3 1961 318 0.0600 ## 4 1962 318 0.0400 ## 5 1963 319 0.0700 ## 6 1964 320 -0.200 ## 7 1965 320 -0.100 ## 8 1966 321 -0.0500 ## 9 1967 322 -0.0200 ## 10 1968 323 -0.0700 ## # ... with 49 more rows ``` ] --- # Plot the Relationship... ```r ggplot(joined_data, aes(x = CO2, y = `Global Land-Ocean Temperature Index`)) + geom_point() + geom_smooth() + labs(caption = "Source: NOAA, NASA") ``` <img src="index_files/figure-html/unnamed-chunk-14-1.svg" style="display: block; margin: auto;" /> --- class: inverse, middle, center # Visualisation --- # ggplot2 .pull-left[ - Combine your data wrangling and chart making into one single process * no more pasting data from excel into powerpoint to make or change a chart! 🙌 - Makes it easy to quickly iterate over different ways of mapping your data to shapes, space and colour - Programming a chart with code forces you to think about what you are doing and why - Allows you to create completley unique charts by adding several different 'layers' of data - Not constrained by the chart types available in other software packages - Produce publication quality graphics just like... ] .pull-right[ <img src="https://d33wubrfki0l68.cloudfront.net/0ab849ed51b0b866ef6895c253d3899f4926d397/dbf0f/images/hex-ggplot2.png" alt="ggplot2 hex" width="60%" /> ] --- # Financial Times .pull-left[ <blockquote class="twitter-tweet" data-lang="en"><p lang="en" dir="ltr">Fun fact for the <a href="https://twitter.com/hashtag/dataviz?src=hash&ref_src=twsrc%5Etfw">#dataviz</a> crowd:<br><br>This chart (and the ones in the story) are the first we've done 100% in ggplot, right down to the custom <a href="https://twitter.com/FT?ref_src=twsrc%5Etfw">@FT</a> font and the white bar in the top left. <a href="https://t.co/BVFmoYX2WL">https://t.co/BVFmoYX2WL</a></p>— John Burn-Murdoch (@jburnmurdoch) <a href="https://twitter.com/jburnmurdoch/status/1006783615022391297?ref_src=twsrc%5Etfw">June 13, 2018</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script> ] .pull-right[ <img src="static/imgs/ft_ggplot.jpg" width="100%"/> ] --- # BBC <blockquote class="twitter-tweet" data-conversation="none" data-cards="hidden" data-lang="en"><p lang="en" dir="ltr">At the beeb we’re now doing a lot of charts solely in ggplot. The charts here for example <a href="https://t.co/bokQHQK6pj">https://t.co/bokQHQK6pj</a> . We’re doing maps too.</p>— Wesley Stephenson (@WesStephenson) <a href="https://twitter.com/WesStephenson/status/1006797669648470016?ref_src=twsrc%5Etfw">June 13, 2018</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script> .center[ <img src="static/imgs/bbc_ggplot.png" width="55%"/> ] --- # ggplot2 - Example ```r london_crime <- london_crime %>% # use london crime data previously imported gather(year, rate, 3:ncol(.)) # gather the year columns to key + value columns (wide to long data) london_crime ``` ``` ## # A tibble: 544 x 4 ## Code Borough year rate ## <chr> <chr> <chr> <dbl> ## 1 E09000002 Barking and Dagenham 1999-00 19.8 ## 2 E09000003 Barnet 1999-00 13.5 ## 3 E09000004 Bexley 1999-00 14.5 ## 4 E09000005 Brent 1999-00 20.8 ## 5 E09000006 Bromley 1999-00 10.9 ## 6 E09000007 Camden 1999-00 28.1 ## 7 E09000008 Croydon 1999-00 17.7 ## 8 E09000009 Ealing 1999-00 22.0 ## 9 E09000010 Enfield 1999-00 14.8 ## 10 E09000011 Greenwich 1999-00 29.4 ## # ... with 534 more rows ``` --- # ggplot2 - Example ```r plot_years <- unique(london_crime$year) p <- ggplot(london_crime, aes(year, rate, group = Borough)) + geom_line(colour = "SteelBlue") + scale_x_discrete(breaks = plot_years[seq(1, length(plot_years), by = 2)]) + labs(x = "Year", y = "Rate per Thousand Population", title = "Violent Crime Trends by London Boroughs", caption = "Source: data.gov.uk") p ``` <img src="index_files/figure-html/london_facet-1.svg" style="display: block; margin: auto;" /> --- # Facets .left-column[ ```r p + facet_wrap(~ Borough) + scale_x_discrete(breaks = NULL) + scale_y_continuous(breaks = c(10, 30, 50)) + labs(x = NULL, subtitle = "1999-00 - 2015-16") + theme(strip.text.x = element_text(size = 6)) ``` ] .right-column[ <img src="index_files/figure-html/london_facet2_out-1.svg" style="display: block; margin: auto;" /> ] --- # Geo Facets .left-column[ Switch `facet_wrap` to `facet_geo` with the `geofacet` package to arrange facets in a geographically ordered grid. ```r library(geofacet) # for geographically arranged facets p + facet_geo(~ Borough, grid = "london_boroughs_grid") + scale_x_discrete(breaks = NULL) + scale_y_continuous(breaks = c(10, 30, 50)) + labs(x = NULL, subtitle = "1999-00 - 2015-16") + theme(strip.text.x = element_text(size = 6)) ``` ] .right-column[ <img src="index_files/figure-html/london_facet3_out-1.svg" style="display: block; margin: auto;" /> ] --- # Joy Plots ```r library(ggridges) # for creating ridgeline plots of 'joy plots' a la joy division unknown pleasures ggplot(london_crime, aes(x = rate, y = factor(year) %>% fct_rev(), fill = ..x..)) + geom_density_ridges_gradient(scale = 3, rel_min_height = 0.01, colour = "white") + viridis::scale_fill_viridis(name = "Rate", option = "C") + labs(y = NULL, title = 'Distribution of Violent Crime Rate in London Boroughs', subtitle = "Rate per Thousand Population", caption = "Source: London Data Store") + theme_ft_rc() ``` --- # Joy Plots <img src="index_files/figure-html/jpy-plot-out-1.svg" style="display: block; margin: auto;" /> --- # Maps ```r library(sf) # for spatial analysis in a 'tidy' framework # join london borough shapefile to data and filter to latest year ldn_shape <- st_read("data/ldnb/London_Borough_Excluding_MHW.shp", quiet = TRUE) %>% st_transform(4326) %>% inner_join(london_crime, by = c("GSS_CODE" = "Code")) %>% filter(year == "2015-16") ldn_map <- ggplot(ldn_shape) + geom_sf(aes(fill = rate), colour = "#FAFAFA") + coord_sf(datum = NA) + scale_fill_viridis_c(name = "Rate per Thousand Population", guide = guide_colourbar(title.position = "top", barwidth = 13, barheight = 0.5)) + theme(legend.position = c(0.8, 1.05), legend.direction = "horizontal") + labs(title = "Violent Crime in London", subtitle = "2015-16", caption = "Source: London Data Store") ``` --- # Maps <img src="index_files/figure-html/unnamed-chunk-17-1.svg" style="display: block; margin: auto;" /> --- # Interactive Graphics As well as static graphics with `ggplot2`, [`htmlwidgets`](https://www.htmlwidgets.org/) bring the best of JavaScript data visualisation to R. A few lines of R code can produce rich interactive charts and maps that you can embed in reports and dashboards. For example, Plotly allows you to convert a static ggplot to an interactive graphics with one line! ```r # make the same london crime ggplot as before with added html tooltip aesthetic library(plotly) # R package for the plotly javascript library library(glue) # for glueing strings to data p <- ggplot(london_crime, aes(year, rate, group = Borough, text = glue("<b>{Borough}</b><br>Year: {year}<br>Rate: {round(rate, 2)}"))) + geom_line(colour = "SteelBlue") + scale_x_discrete(breaks = plot_years[seq(1, length(plot_years), by = 2)]) + labs(x = "Year", y = "Rate per Thousand Population", title = "Violent Crime Trends by London Boroughs") p_i <- ggplotly(p, tooltip = "text") # then convert to plotly js chart ``` Chart on the next slide! --- # ggplot2 -> Ploty JS <iframe src="plotly_example.html" width=100% height=550 frameborder="no"></iframe> --- # Leaflet We can use the previously merged london borough shapefile and violent crime data to make an interactive leaflet map like so... ```r library(leaflet) # for interactive mapping pal <- colorNumeric("viridis", NULL) ldn_map_i <- leaflet(ldn_shape) %>% addProviderTiles("CartoDB.Positron") %>% addPolygons( fill = ~rate, fillColor = ~pal(rate), fillOpacity = .6, label = ~glue("<b>{Borough}</b><br>Year: {year}<br>Rate: {round(rate, 2)}") %>% lapply(htmltools::HTML), stroke = TRUE, color = "#FAFAFA", weight = 1, smoothFactor = 0 ) %>% addLegend(pal = pal, values = ~rate, opacity = 1.0, title = "Crime<br>Rate") ``` --- # Leaflet <iframe src="leaflet_example.html" width=100% height=550 frameborder="no"></iframe> --- # Highcharts ```r library(highcharter) # R package for the highcharts javascript library library(treemap) # to load GNI data below data(GNI2014) # world gross national income data # 2-tiered treemap with one line of code treemap <- hctreemap2(GNI2014, c("continent", "iso3"), size_var = "population", color_var = "GNI") ``` <iframe src="hc_example.html" width=100% height=400 frameborder="no"></iframe> --- class: inverse, middle, center # Communication --- # Communication .pull-left[ All your coding is only going to be useful if you can effectively communicate the findings to a broader audience of stakeholders, in formats they are comfortable with. This is where R really excels above other programming languages: - [`rmarkdown`](https://rmarkdown.rstudio.com/) is an authoring format that enables the easy creation of dynamic documents, presentations, and reports from R. It combines the core syntax of markdown (an easy to write plain text format) with embedded R code chunks that are run so their output can be included in the final document - [`Shiny`](https://shiny.rstudio.com/) makes it possible to build fully interactive web-apps and dashboards that puts the analytical and graphical power of R at the fingertips of your users in elegant, browser-based user interfaces ] .pull-right[   ] --- # Rmarkdown Combining narrative and commentary with code in a single document is a very powerful concept. It makes your report fully **reproducible**. That is, a script you can simply re-run whenever your data or code changes and all data-driven R outputs will update automatically in your report. No more copy & pasting data around various MS Office programs!* The output options enables you to share your analysis in the format your audience is most comfortable with, be it: - HTML Report (enables the use of interactive graphics) - HTML Slideshow (this entire slideshow is written in rmarkdown!) - PDF Report - MS Word Document - MS PowerPoint Slides - Dashboards - Websites - [cultureofinsight.com](https://cultureofinsight.com) is written in rmarkdown Having your report as an executable R script also allows you to paramaterise some variables and automate the generation of several different reports - one for each subset of a data set, for example. .footnote[ *[Check out this video](https://www.youtube.com/watch?v=s3JldKoA0zw) for a better illustration of the problem and solution to traditional data reporting methods. ] --- # Rmarkdown <img src="http://r4ds.had.co.nz/rmarkdown/diamond-sizes-report.png" alt="rmarkdown-screenshot" width="70%"/> .center[ Example of the code and output of an html rmarkdown report ] --- # Shiny Shiny goes a step further and allows end-users to interact with a live R session through a user-interface, giving them the power of R without any required knowledge of coding. Shiny dashboards are significantly more powerful than expensive off-the-shelf dashboard software that are limited to visualising the data you supply to them with their own charting libraries. Shiny can draw on the vast eco-system of open-source technology, dynamically run any type of R code you want it to, and feed the results back to the end-user. #### Example Use Case - fetch some live google analytics data - merge it with some internal market research data - run a model to calculate whether a recent marketing campaign drove a significant increase in web traffic - visualise the results to the user in interactive charts - give them the option of downloading an auto-generated rmarkdown PDF report And there's no limitations on the look and feel of your app. There's lots of great CSS themes to choose from, or if you know some HTML + CSS you can build a complety bespoke user interace interface from the ground-up. Check out some of the shiny apps we've made over on the Culture of Insight [portfolio page.](https://cultureofinsight.com/portfolio) --- # Shiny <img src="static/imgs/shiny_example.png" alt="shiny-screenshot" width="70%"/> .center[ Example of the code and output of basic shiny app ] --- class: inverse, middle, center # Learning --- # Learning We hope this has inspired you to start learning some R! We're currently offering a 1-day "Introduction to R" course to get people started on their journey to data programming nirvana. The course will teach you how to import, collate, join, and transform datasets with the tidyverse packages in the RStudio IDE. This will provide the base knowledge you need to: - Liberate you from the constraints of Excel - Elimate human error in your work by automating your data tasks with R scripts - Free-up time to focus on insight, not data wrangling You can extend your training to focus on topics like: - Data visualisation with `ggplot2` - Automated reporting with `rmarkdown` - Web-application development with `shiny` Head over to our [eventbrite page](https://www.eventbrite.co.uk/e/master-data-with-code-tickets-50351709318) to sign up, or [get in touch here](https://cultureofinsight.com/training) if you'd like to know more! --- class: inverse, middle, center, contact-links # Thank you! [cultureofinsight.com](https://cultureofinsight.com/) twitter: [@paulcampbell91](https:/twitter.com/paulcampbell91) github: [paulc91](https://github.com/PaulC91) linkedin: [paul campbell](https://www.linkedin.com/in/paul-campbell-792425123/)